What is AI Transparency?

In this blog post I will drill down into the concept of AI Transparency and how it relates to the constructs of Trustworthy AI and Ethical AI.

[Source: Dall-E plus some of my edits to the image]

Definition of AI Transparency

For me personally, the best definition of Transparency vis-à-vis AI comes from the recently leaked copy of the European Union AI Act, which defines AI Transparency this way:

Transparency means that AI systems are developed and used in a way that allows appropriate traceability and explainability, while making humans aware that they communicate or interact with an AI system, as well as duly informing deployers of the capabilities and limitations of that AI system and affected persons about their rights.

Let me further define two words in this definition: Traceability and Explainability. Traceability means the ability to “track an AI’s predictions and processes, including the data it uses, the algorithms it employs, and the decisions it makes,” thus letting us determine how an AI system came to a particular decision.

Explainability is the ability to “describe an AI model, its expected impact and potential biases” and, in doing so, “helps characterize model accuracy, fairness, transparency and outcomes in AI-powered decision making.” Basically, both concepts address the need to peer into the “black box” of AI systems and help us better understand them and determine how they reached their decisions.
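To make Traceability a bit more concrete, here is a minimal sketch of what recording a single automated decision might look like. The `DecisionTrace` class, its field names, and the loan-screening example values are all hypothetical and are not drawn from the EU AI Act or any particular product; the only point is that the data used, the model involved, and the decision reached are captured together so they can be reviewed later.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class DecisionTrace:
    """One traceable record of a single automated decision (hypothetical schema)."""
    model_name: str      # which algorithm or model produced the decision
    model_version: str   # exact version, so the decision can be revisited
    inputs: dict         # the data the system used
    decision: str        # the outcome the system reached
    reasons: list        # plain-language factors behind the outcome
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Serialize the record so it can be stored and audited later.
        return json.dumps(asdict(self), sort_keys=True)


# Illustrative values only; none of these come from a real system.
trace = DecisionTrace(
    model_name="loan_screening",
    model_version="1.4.2",
    inputs={"income": 52000, "debt_ratio": 0.31},
    decision="refer_to_human_review",
    reasons=["debt_ratio above 0.30 threshold"],
)
record = json.loads(trace.to_json())
```

The `reasons` field is a nod to Explainability: alongside the raw inputs, the record keeps a human-readable account of why the system decided what it did.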

Obviously, Traceability and Explainability are important concepts for developers and deployers of AI systems, but I like how the concept of AI Transparency is also applicable to end users of those systems. We humans should know when we are interacting with AI systems, and we should know our rights with respect to them.

With definitions out of the way, let’s take a look at how AI Transparency fits in the constructs of Trustworthy AI and Ethical AI.

Trustworthy AI

The field of AI ethics has emerged as a response to the growing concern regarding the impact of AI and the acknowledgment that while it can deliver great gifts, it could also represent a modern “Pandora’s box.” AI ethics concerns the “psychological, social, and political impact of AI.” Ethical AI aims to utilize AI in a lawful manner that adheres to a set of ethical principles: respecting human dignity and autonomy, preventing harm, enabling fairness, and being transparent and explainable. It must also meet a robust set of technical and social requirements that help ensure that AI performs safely, securely, and reliably without causing unintended adverse impacts. The European Commission says that if an AI system meets this “overarching value framework” and takes a “human-centric approach” (i.e., it is designed, developed, and used for the betterment of humankind), then it can be considered “trustworthy.”[1]

The European Union has done a great job of defining a framework for Trustworthy AI. It has three components as shown in the diagram below. I highlighted where Transparency is both a key Ethical AI principle and a key requirement.

[Source: https://www.aepd.es/sites/default/files/2019-12/ai-ethics-guidelines.pdf]

The first core component of Trustworthy AI is to ensure that AI is built and implemented lawfully (i.e., Lawful AI). For example, in the US, this means adhering to anti-discrimination laws and regulations in housing and employment. The second component is Ethical AI, which is where Transparency comes into play, as I describe in more detail below. The third component is robustness (i.e., Robust AI), which means an AI system should not cause unintentional harm. Let's drill down on Ethical AI and see how Transparency is a key principle and a key requirement.

Ethical AI: Key Principles

Laws do not always keep pace with rapid technology development, so the fact that an AI system is legal does not mean it meets ethical norms or standards. The European Commission defines the core ethical AI principles as respect for human autonomy, harm prevention, fairness, and explicability (i.e., an AI system is transparent and explainable).[2] Let’s look at each of these core foundations of Trustworthy AI.

First and foremost, respect for human autonomy means that AI systems need to be human-centric. This means that AI systems need to be based on a “commitment to their use in the service of humanity and the common good, with the goal of improving human welfare and freedom.” In other words, AI systems need to be built for the betterment of humanity and to advance human well-being and dignity, i.e., they must enable humans to flourish. From there, they must respect the freedom and autonomy of humans. Humans should maintain self-determination over themselves, and AI systems should not treat humans merely as objects to be “sifted, sorted, scored, herded, conditioned, or manipulated.”[3]

The principle of harm prevention means that AI systems should not adversely affect human dignity or the mental and physical integrity of humans. For example, AI systems should not cause asymmetries of power between employers and employees, businesses and consumers, or governments and their citizens. In addition, vulnerable populations such as children and the elderly need to be specifically protected.[4]

The design and implementation of AI should also be fair, treating groups equally and free from unfair bias. That being said, it is difficult to define and measure fairness. Fairness is a human determination, not a technological or mathematical one.[5]

And finally, another core AI ethics principle is transparency and explainability. I talked above about “black box” algorithms that caused frustration when internal and external people could not figure out why businesses’ AI-based automated decision-making systems made the decisions they did. This principle requires the capabilities and purposes of AI systems to be clearly communicated and their decisions and any output to be explainable to those affected. Otherwise, it is challenging to question and contest a decision. Transparency and explainability (also referred to as “explicability”) are keys to building trust in AI.[6]

So, you can see that Transparency is a key principle of Ethical AI, but I will discuss below that it is also a key requirement.

Ethical AI: Key Requirements

Different individuals and organizations have identified key requirements for AI to be trustworthy that flow from the above four principles. I will highlight seven of them, and a few will deviate from the above diagram, but Transparency is a key requirement.[7]

The first requirement is human well-being. This requirement is based on the ethical principle of respect for human autonomy. It asks whether an AI system diminishes the deliberative capacity of humans, which is why human oversight matters. It also includes informed consent, in that the AI system should allow humans to withdraw their consent.

The second is safety. AI systems must be resilient to attacks from hackers. In addition, AI systems should be designed so they cannot be misappropriated for a different use (e.g., delivery drones being weaponized). AI systems should also be designed with a “fallback plan,” so that if the system goes awry, it can self-monitor and correct or stop. Finally, AI should also protect the physical and mental integrity of humans.

The third is privacy. People should know how their data is used and consent to its usage. People should have the right to access their data, delete it, or correct it. Personal information should be protected and not sold or shared without consent.

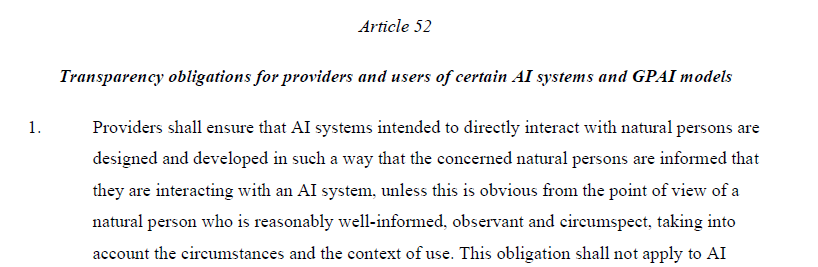

The fourth is Transparency. This requirement is linked to the principle of explicability. There needs to be transparency on how the AI-based system works and how it makes its decisions. The goal is to avoid the “black box” scenario where it is unclear how an AI system made its decision — those decisions should be traceable. Furthermore, for AI systems that mimic humans (e.g., a technical support chat interface), it should be clear that the consumer is interacting with an AI system versus an actual human.
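As a small illustration of the disclosure point, a support chat could be built so that the very first message always states that the user is talking to an AI. This is only a sketch under my own assumptions: the `AI_DISCLOSURE` wording and the `open_chat_session` function are hypothetical, and real products and regulations may call for different language or placement.

```python
# Hypothetical disclosure text; actual wording would depend on the product and applicable law.
AI_DISCLOSURE = "You are chatting with an automated AI assistant, not a human."


def open_chat_session(greeting: str) -> list:
    """Return the opening messages, always starting with the AI disclosure."""
    return [AI_DISCLOSURE, greeting]


messages = open_chat_session("Hi! How can I help with your router today?")
```

Putting the disclosure inside the function that opens every session, rather than leaving it to each greeting, means it cannot be accidentally omitted.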

The fifth is fairness. This requires developers of AI systems to avoid AI bias, which is the unjustified differential treatment of, or outcomes for, different subgroups of people. Not surprisingly, this requirement ties in with the ethical principle of fairness.
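One rough way to look for differential outcomes is to compare how often each subgroup receives a favorable decision. The sketch below is illustrative only: the function names and the sample data are made up, and a ratio like this is just one signal. As noted earlier, whether a difference is unjustified remains a human judgment, not a mathematical one.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, approved) pairs; returns the approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in outcomes:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest group rate (1.0 means equal rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up sample data: four decisions each for two hypothetical groups.
sample = [("A", True), ("A", True), ("A", False), ("A", True),
          ("B", True), ("B", False), ("B", False), ("B", True)]
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
```

In this sample, group A is approved three times out of four and group B two times out of four, so the ratio comes out to about 0.67, a gap that would merit a closer look by a human reviewer.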

The sixth is societal and environmental well-being. This ties in with the principle of preventing harm, not only to humans interacting with the AI system but to the broader society and sustainability of the environment.

The seventh and last is accountability. This links to the principle of fairness. It requires human oversight of AI, i.e., keeping the “human-in-the-loop,” and designates that humans have ultimate responsibility for any harms caused by AI. It also requires auditing in the form of algorithmic impact assessment that encompasses issues of fairness, explainability, and resilience to failure or hackers.

Summary of AI Transparency

AI Transparency is a broad term that applies not only to developers and deployers of AI systems but also to end users. Furthermore, it is a key principle of Ethical AI (a key component of Trustworthy AI) as well as a key requirement.

In future blog posts, I will talk more about the California AI Transparency Act (CAITA), but I wrote this blog post in part to define the concept of AI Transparency at a high level so that readers can understand that CAITA in its initial form will address AI Transparency in the context of end users (i.e., consumers) versus developers or deployers of AI systems. For example, it will help consumers who interact with AI systems that mimic humans (e.g., a technical support chat interface) understand that they are interacting with an AI system versus an actual human. This is very much in line with Article 52 of the European Union AI Act, which provided the definition of AI Transparency I referenced above.

End Notes

[1] Emre Kazim and Adriano Koshiyama, “A high-level overview of AI ethics,” ScienceDirect, September 10, 2021, https://www.sciencedirect.com/science/article/pii/S2666389921001574. European Commission, “Ethics Guidelines for Trustworthy AI,” April 8, 2019, https://www.aepd.es/sites/default/files/2019-12/ai-ethics-guidelines.pdf.

[2] European Commission, “Ethics Guidelines for Trustworthy AI,” April 8, 2019.

[3] Emre Kazim and Adriano Koshiyama, “A high-level overview of AI ethics,” ScienceDirect, September 10, 2021. European Commission, “Ethics Guidelines for Trustworthy AI,” April 8, 2019.

[4] European Commission, “Ethics Guidelines for Trustworthy AI,” April 8, 2019.

[5] European Commission, “Ethics Guidelines for Trustworthy AI,” April 8, 2019. Nicol Turner Lee et al., “Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms,” The Brookings Institution, May 22, 2019, https://www.brookings.edu/articles/algorithmic-bias-detection-and-mitigation-best-practices-and-policies-to-reduce-consumer-harms/.

[6] European Commission, “Ethics Guidelines for Trustworthy AI,” April 8, 2019.

[7] The seven requirements I list in this section are an amalgamation of “Ethics Guidelines for Trustworthy AI” and “A high-level overview of AI ethics.” European Commission, “Ethics Guidelines for Trustworthy AI,” April 8, 2019. Emre Kazim and Adriano Koshiyama, “A high-level overview of AI ethics,” ScienceDirect, September 10, 2021.